A Programmer's Odyssey

IW: 2025-03-15

LU: 2025-03-18

Long were the times punching cards was necessary to interact with a machine.

So long, in fact, we cannot even speak to anyone about it casually, not often enough, about

anything anyway.

Is technology to blame for our not-punching-cards and not-speaking-casually?

You might as well call it non-existing: the virtuality of being here, while not being here,

reading this, while only trudging your way through words until the next mental tick reminds

you to check whatever you were doing before, that you are also doing right now, and that

you should have done before starting to read this.

Time, as it once was, cannot be factually taken any longer, not even as networked messages

can be taken to briefly exist in a wire, so long as they’re received or perceived by the

network.

The internet exists in an unforeseeable future of potentiality: messages

only arrive in the near future, while nothing can be present on a virtual medium,

not long enough for a future to consolidate in any natural or human way.

Posters are usually on a

high, addicted to the precocious reaction

of others, which in turn triggers their precocious response, and debate continues without

thought, powered by the anxiety-inducing instantaneity of the near-future, that won’t allow

full understanding as it requires

no reaction time in order to prove engagement.

If you are not engaged enough, you might even lose track of your own conversation.

Every conversation on the web is a group conversation. When it is not, it is a monologue,

almost always with oneself, as no Twitch chat can be as demanding as to require more than a

few seconds of attention.

So are you frustrated with the state of technology? Can you even see a better way to employ hardware for humanity?

If only I wasn’t working with this, then I would live better

– I hear you.

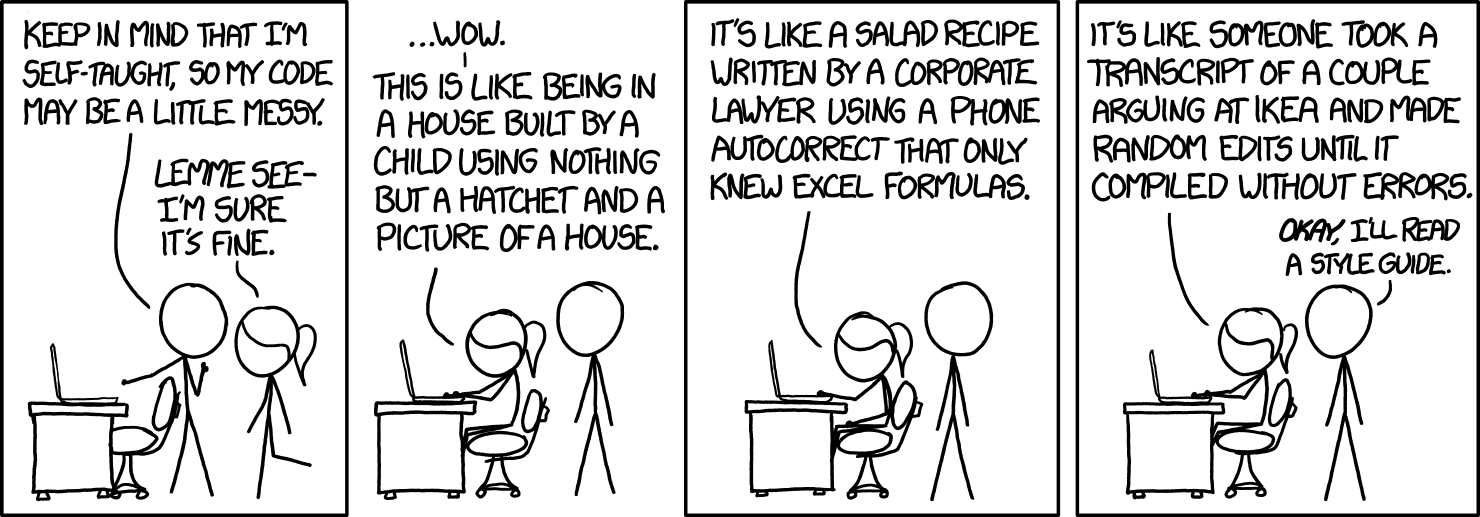

Maybe it is the case, and if you do have other talents that allow you to live well enough, go for it. Not having too many options is fine, actually. You might feel imprisoned by your frontend skills, or your lack of portfolio, but that can only become motivation to actually go and do it. Many people have done it before, and better than you, many more people will do their own versions which might or might not be better, so you should just do something, or anything. And if you don’t believe that software will ever get better, it might be time to improve your skills so that you can do what you believe would be better, or teach newer people about it to forward your hopes.

Hope, like a dream, exists as a practice. Unless you’re hoping for a chance event, you can actually improve your chances of succeeding, transforming the future somehow. Computers have existed for very little time. Most software has NOT been written, even vaguely well enough, to be considered atemporal or to have solved a problem once and for all. Take your favorite open-source software pieces and rip them apart, clone them and see through the bytes. If you aren’t willing to do that you are not ready for this revolution. Not understanding is part of understanding, until it isn’t, but that takes a long time, and you must get used to not understanding it fully. Understanding better always comes with practice, even for mathematicians, or other engineering fields, and in that way we are truly engineers.

Code can be like pain.

Track manager calls helper calls helper calls helper calls binary interface calls networked

code sends packet, arrives at a handler, it handles, and then it handles with a helper,

then you get state, then you propagate, then in the next loop you might see an error

because you have no idea what the previous loop was, and the mutable state could also be

changed somewhere else, so you must check references for

every shared variable.

But code can also be

not pain.

Track manager sends a play command through a gRPC interface,

arrives at the main loop which updates the player queue and redraws the UI using an async hook.
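
A minimal sketch of that second shape, assuming an in-process queue stands in for the gRPC interface and a plain function returning text stands in for the async UI hook. Every name here (`Player`, `handle`, `redraw`, `main_loop`) is hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Player:
    # All player state lives in one place, owned by the main loop.
    queue: list = field(default_factory=list)
    now_playing: Optional[str] = None


def handle(player: Player, command: tuple) -> None:
    """Apply one command to the player state."""
    kind, track = command
    if kind == "enqueue":
        player.queue.append(track)
    elif kind == "play":
        player.now_playing = track
        # Playing a queued track removes it from the queue.
        if track in player.queue:
            player.queue.remove(track)


def redraw(player: Player) -> str:
    """Stand-in for the UI redraw: render the state as one line of text."""
    return f"playing={player.now_playing} queue={player.queue}"


def main_loop(commands: deque) -> list:
    """Drain the inbox; each iteration is one visible step: receive, update, redraw."""
    player = Player()
    frames = []
    while commands:
        handle(player, commands.popleft())
        frames.append(redraw(player))
    return frames


inbox = deque([("enqueue", "a.flac"), ("enqueue", "b.flac"), ("play", "b.flac")])
frames = main_loop(inbox)
```

There is one place where state changes and one place where it is drawn, so the previous loop is never a mystery: it is the previous entry in `frames`.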

We often need abstractions to deal with our own ignorance, because we are not ready to understand what is happening, or we don’t want to see it because there’s too much detail. Detail is often better than non-detail in that it actually describes.

Abstractions hide

so they cannot possibly describe.

It is often not simply a matter of pure skill or philosophy: object-oriented and functional programmers alike will agree that shared mutable state can only cause headaches. So then, why do we keep insisting on such patterns? Asynchronous programs have brought upon us a reality in which we cannot see the full time dimension anymore. We’re lost in non-timed slices of requests, communicating with our own services antagonistically through broken, incomplete, outdated, and untrusted data. Microservice-oriented architectures then commit gross transgressions, separating all state and all operations while still assuming that asynchronous services can be made manageable when they are like ant colonies. If no one can see the fully Byzantine data transformations, not even after years of enterprise, what can their programs actually accomplish? Collaborative effort is tremendously hindered by our overwhelming focus on meaningless and useless contracts with ourselves. Unlike any Fordist model, it is simply, completely, and utterly ineffective to have such complicated designs for what is obviously an artist's craft.

It must be less painful to simply make the code you need, instead of what you think you need. If you ever felt anxious upon going to definition, then it is that which you want to avoid. There must be a stupidly obvious problem with your code when 90% of it needs to be abstracted or else it is repeated. Consider the case that such absurd complexity can only arise from stupid data layout.

Code can only be

painless in the humbling process of looking at the data;

Then you know you can bring forth your wants through the defyingly plain:

Unobtrusively manifest, impossibly clear, effortlessly built by arduous insight.

The magical code that does

exactly enough to perform the task at

hand and nothing else, in a way that defies incompetent readers to improve their

understanding, and delights the fluent programmer for its simplicity.

Better software is like poetry which illuminates how the problem can become trivial once

you truly understand it.

It is truly a wonder that code can be poetry when the data is simply there.

Elegance should be our main aspiration, and if one cannot do it, it should suffice to

simply solve the problem at hand so as to

avoid pain.

Avoiding pain has to do with making your own life

easier.

It has nothing to do with hiding code behind

a thousand helpers.

Simplifying life has much more to do with organizing a room, or packing a suitcase.

Similarly, simplifying code comes naturally with organizing our understanding of data

transformations.

To make buildings one needs materials and technique.

Similarly, to make code, one needs data and algorithms.

No builder would spend most of their time planning for all possible renovations their

clients might want to do, that is simply an anxious worry if not expressed clearly, because

it is not a part of solving the actual problem.

Any sane service can only work as a collection of supporting parts, but more often than not you see programs fearful of their own dependencies: try-catch blocks dealing with obvious cases, or functions that receive hardcoded arguments only to perform illogical statements on what is already, statically, known. That can only happen if the programmer cannot see the consequences of their own actions. Defensive code has become standardized by distrustful stacks, as the nature of incomprehensibly complex networks becomes standard in every application. What kind of half-answer can anyone accomplish with half-solved mental models of what their code is actually doing? Does it only have to seem to solve the problem to convince human souls into perpetual bondage in maintainership? Are we bound to be slaves of these ghosts of the past? The true question lies in what we are trying to maintain, for maintaining is some sort of preservation. There is no other good preserved beyond encoded comprehension, thus software must be a book by which you can understand the problem at hand, not make-up to make your bullshit look pretty.

Long were the times thinking before inputting was necessary to interact with a machine.

So long, in fact, that you might not even need to think to interact with another human being

across your terminal screen.

What makes you think outside the machine?

The computer is our lens, and all computation is, for all intents and purposes, real and true.

So by learning to compute properly, you might as well learn to think through the machine -- as a

“bicycle for the mind”, as our friend Jobs put it -- as you can use it, as we’re collectively

not using it.

Frivolously typing doubled logic, setting a variable to null to put it through a function

that checks the condition at every step, only to return a default at the end, forever

available, but you just couldn’t see far enough, blinded by your own

omission.

To see a constant as a constant is just the same as looking at the sky and seeing the stars

for what they are.

To pretend stars are inconstant only because you know sometimes they might go

supernova, maybe in a hundred thousand years, is just a blinding assumption for any

subject not pertaining to astrophysics simulation.
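
The doubled logic described above might look like the first function in this sketch; the second one simply treats the constant as a constant. `DEFAULT_VOLUME` and both function names are hypothetical, made up for illustration.

```python
DEFAULT_VOLUME = 50  # hypothetical constant; it never changes at runtime


def volume_doubled(settings: dict) -> int:
    """The anxious version: start from None and re-check it at every step."""
    volume = None
    if "volume" in settings:
        volume = settings["volume"]
    if volume is None:
        volume = DEFAULT_VOLUME
    if volume is not None:
        return volume
    return DEFAULT_VOLUME  # unreachable: volume is never None at this point


def volume_plain(settings: dict) -> int:
    """The same result, stated once."""
    return settings.get("volume", DEFAULT_VOLUME)
```

Both functions return the same value for any dict whose `"volume"` entry, when present, is an integer. The default was available all along.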

In order to manipulate programs effectively, you must free your operations from the actual

memory lifetime as much as possible.

It is imperative to separate data from operations, so as to free them for reuse, and

for partial reuse of partial data without deep nesting, whatever nesting even means

empirically.

One only needs a field that has another field that has itself and other fields that have

itself because they failed to untangle their own

understanding,

not because the problem requires tangling.

Avoiding shared mutable state can also be seen as avoiding its dispersal.
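
As a small sketch of what separating data from operations can look like, assume a flat, read-only record and a couple of free functions. `Track`, `total_seconds`, and `by_artist` are hypothetical names, not taken from any library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Track:
    # Plain, flat data: every field is visible and nothing points back at a parent.
    title: str
    artist: str
    seconds: int


def total_seconds(tracks: list) -> int:
    """Works on any list of tracks, wherever it came from."""
    return sum(t.seconds for t in tracks)


def by_artist(tracks: list, artist: str) -> list:
    """Partial reuse of partial data: a filtered list is still just a list."""
    return [t for t in tracks if t.artist == artist]


library = [
    Track("Intro", "A", 60),
    Track("Theme", "B", 240),
    Track("Outro", "A", 90),
]

seconds_by_a = total_seconds(by_artist(library, "A"))
```

Neither function knows about a library object, a manager, or who owns the list, so `total_seconds` composes with `by_artist` without any nesting at all.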

Any well-understood problem can be expressed in many ways, and it is the programmer’s task to find one mere statement simple enough for the application at hand. So much of what could be simple programs turns into unmanageable behemoths. The magic in a main loop is destroyed by callbacks and hooks, all in favor of what seemed right, or superficial statements about stellar physics. Main loops may not solve every problem by themselves, but they are nonetheless one truth about programs. And the fact that every program must have some kind of entry point also defines its life. Why are we so desperately trying to forget about it, and to make our programs live in non-time, as internet daemons or mere logs of what we could not trace?

Deep as we are, there’s still something about the nature of programs as nature. Not actual nature, not even human nature, but a human-made nature of our systems. And as we deal with them, not having the tools to understand or analyse them, we are swindled by their facticity as inert parts of the machine, while they are simply code, just like the ones we create ourselves. We can be reminded of this because they are flawed, and so we want fixes, updates, better! However, any truly useful and long-lived system is understood as a legacy system by those who work with it. Legacy is either not-understood, or not-competing with the latest understanding, or even both. It may well be that someone’s, or a team’s, understanding of the program has been improved, but the program does not reflect it. Or the people who understand the program, alone, could never hope to rewrite it, because even they cannot understand the legacy fully, full of obscure detail and tales of past glory. Isn’t this the same feeling we have towards historic institutions? Semi-understood, we think we know better, yet no one can actually change anything because the actual thing is truly beyond comprehension. Such is the tragedy of our comprehension.

Take tragedy as study, and probe your own limits.

Why not spend a weekend smashing some legacy and see whether you can actually make a small

garden where you want to live?

Not caring is important for moving forward, but grasping our transformations for what they

truly are may lead us into new possibilities.

Just like we know what a tree is, not after seeing one tree, but after recognizing way too

many trees.

You should be

attentive enough to notice rewriting the same logic

for the third or the fifth time, or to look up references of what you are trying to

accomplish just to find it is already implemented elsewhere.

But you should not be

reflexive in making methods for the sake of clean code, that is not what

programming is about, or ever was.

Long were the times understanding a machine was necessary to interact with a machine.

So long, in fact, that for the past 50 years, most of what was known about machines and

programming has been ignored and is not taught anymore.

Well then, maybe in the end, we just needed to get familiar enough with bytes to see their beauty.

Let’s end our meditation with a rant of what has brought us to this point, which was

nonetheless heavily influenced by the environment of business software, but mostly the

web-sprawl of course.

Object-oriented libraries pose themselves as fully-comprehensive vocabularies

for specific domains, and while it is nice to draw analogies and try to make sentences with

code – yielding a few lines per thousand of somewhat funny “prose” – the actual

bytes are often

lost behind getters and setters, and stylistic

choices prevail over actual functionality.

The choice in high-level languages is often between functional style, or getter-setter

style, or imperative messes with adverbial methods to reflect subjective qualities, and

neat spelled-out names that can be hugely confusing at best.

What about namespaces?

Arguably the only actual stylistic improvement on the C language, as the main mechanism

behind modern imports.

It is not uncommon, however, to find C-style library code: import *

equivalent, or worse,

from stable import stable_horse_power, StableHorse, StableHorsePower, StableHorseManager,

StableHorseManagerVersioned, among other atrocities.

Well then, what good has encapsulation brought, besides us having stupidly slow editors

that won’t work with longer files?

You might say that it is great to be able to handle logic when setting a value, so that you

can hook asynchronous updates on networked components or whatever.

I then challenge you to think about the semantics of setting a value.

If the state is shared through the network, it is expected to make the caller

perform the full network call when they need it.

Imagine an async library abstracted through a sync interface, and that all setters have

500ms latency, so that if I have a main loop to set 5000 elements it would take around 40

min to complete.

Now, in Rust this code can be perfectly safe, the compiler will optimize the hell out of

it, but it will still take 40 min to complete.

If, however, I know this fact about the sync wrapper, and I insist on using it

instead of the actual async library, I might find a helper that does set_many

and do that instead of my loop.

So what good was the abstraction?

I can no longer trust that setting values actually completes the operation, because it does

not emphatically happen in a useful amount of time.

It might have provoked a massive downtime, it might even have cost someone 40 min of

waiting because they actually waited through.

Just thinking about it gives me chills, so I’ll stop here.
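
For the record, the arithmetic: 5000 calls at 0.5 s each is 2500 s, a little under 42 minutes. The sketch below tallies latency instead of actually sleeping. `SyncWrapper`, `set`, and `set_many` are hypothetical stand-ins for the wrapper described above, and the assumption that a batch costs a single round trip is mine.

```python
LATENCY_S = 0.5  # the 500 ms per call from the text


class SyncWrapper:
    """Hypothetical sync facade over a networked store; it counts latency instead of sleeping."""

    def __init__(self) -> None:
        self.data = {}
        self.elapsed_s = 0.0

    def set(self, key: str, value: int) -> None:
        # One network round trip per call, hidden behind an innocent-looking setter.
        self.elapsed_s += LATENCY_S
        self.data[key] = value

    def set_many(self, items: dict) -> None:
        # Assumed: the whole batch costs a single round trip.
        self.elapsed_s += LATENCY_S
        self.data.update(items)


items = {f"k{i}": i for i in range(5000)}

looped = SyncWrapper()
for key, value in items.items():
    looped.set(key, value)

batched = SyncWrapper()
batched.set_many(items)

looped_minutes = looped.elapsed_s / 60  # 2500 s, a little under 42 minutes
batched_seconds = batched.elapsed_s     # 0.5 s
```

Both objects end up holding identical data. Nothing in the signature of `set` tells you which of the two bills you are about to pay.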

Now, encapsulation might be useful for internal helper functions, but it should not be the

default, especially for data.

If you have a library that will only ever give you helper methods to access data, and you

find that you need that one field not on the API, well, good luck.

It is simply unfair, and based on the idea that “implementor knows better”, while in truth

it is impossible to decide the true use of bytes, because it is

only data.

When anyone restricts your use of their bytes, you are unfortunately using

libraries as services, and it might as well be a sea-wave emulator crypto-mining

thing that steals your computer data, because you don’t even care to go to definition and

wonder what the hell the helper is actually helping you with.

This pattern of hidden data which creates namespaces as well has led us to the

initialization epidemic, where every single struct must have initialization helpers,

oftentimes taking useless arguments, then performing lots of logic.

If every folder and file are namespaces, then every struct/class is its own namespace, and

structs owned by the struct also constitute their own “custom” namespaces (as field names),

we do live in namespace-hell, and more importantly compositions of this pattern have

not led us to “cleaner code”.

Code is largely getting more abstract on every new layer that we insist upon adopting, and

this is only helping us cope with the problems we ourselves have created for

maintainability and readability.

Even though there are perfectly good ways to manage “functional” codebases, we keep making

our data more nested and harder to see through.

This is directly related to the classic memory allocation problem.

Keeping track of variable lifetimes seems like a pain, but it is a pain precisely because

we don’t think about them enough, because it is not an easily observable property,

or even easily explainable for beginners.

It is, however, a most important property for any system, and any allocated memory must be

prioritized, or avoided as much as possible.

With this in mind, let’s ponder on the sacredness of the main function, which protects the

lifetimes of all our allocations.

The program can die, and eventually will, and the main function will free the computer of

our impure memory allocations, forever until it is called upon again.
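
One way to picture this, as a sketch rather than a prescription: let `main` open the resource and hand it down explicitly, so its lifetime can be read off the page. The example uses an in-memory SQLite database from Python's standard library; the `users` table and the helper names are made up for illustration.

```python
import sqlite3
from contextlib import closing


def add_user(conn: sqlite3.Connection, name: str) -> None:
    # The connection is an argument, not a global: its lifetime is visible at the call site.
    conn.execute("INSERT INTO users (name) VALUES (?)", (name,))


def count_users(conn: sqlite3.Connection) -> int:
    return conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]


def main() -> int:
    # Everything allocated here lives exactly as long as this block does;
    # closing() closes the connection on the way out, whatever happened inside.
    with closing(sqlite3.connect(":memory:")) as conn:
        conn.execute("CREATE TABLE users (name TEXT)")
        add_user(conn, "ada")
        add_user(conn, "grace")
        return count_users(conn)


result = main()
```

Compare this with a module-level singleton: there, nothing in the code says when the connection begins or ends, only that it is assumed to be there.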

Programmers that write CRUD applications will often only encounter the route

lifetime, then spawn a connection to a database from a singleton (ahem,

global), assumed to live forever as a perfect abstraction of a

resilient service that someone else wrote, under “concrete” type system guarantees of the

parser that someone else wrote, and then write weird helpers that perform

business logic, just like the code someone else wrote just above, because if it

stands out in any way the reviewer will ask for it not to, pretty please.

Then submit their contribution of 12 changed lines, plus or minus 40 formatting changes,

200 lines of codegen in which no one can see a difference with the naked eye, and 3 commits,

encapsulating 4h of work over the past 2 days whenever the experts about the API

were available, because god forbid they push changes to the API without consulting those

first (the experts are too busy to review any code though).

Nonetheless, metrics are good, and the 5000 engineers employed in the organization are all

happy because there have been no layoffs for the past 2 months.

What actual comprehension they are aspiring towards, no one knows.

There are so many services that each employee has to maintain more than one; if not

owned by the company, then it is a forsaken dependency.

There are so many classes and modules that even in the biggest of teams no one

understands a single codebase completely, or has any idea what the dependencies are

actually for, even less the degree to which they are spread.

Vulnerability concerns?

Don’t worry, our C++ dependencies are only accessible to our 5000 engineers, some of whom

we plan on firing tomorrow!

By the way, could you fix it in your spare time?

If I can hope for something that is beyond my control, let it be that AWS main crashes and frees the world of all AI.

Amen.